
From AI Lab to AI Factory: What you should keep in mind


- Kristina Schreiber

– ABSTRACT

AI Factories act as operating platforms


How exotic are AI ecosystems in the banking and insurance environment?

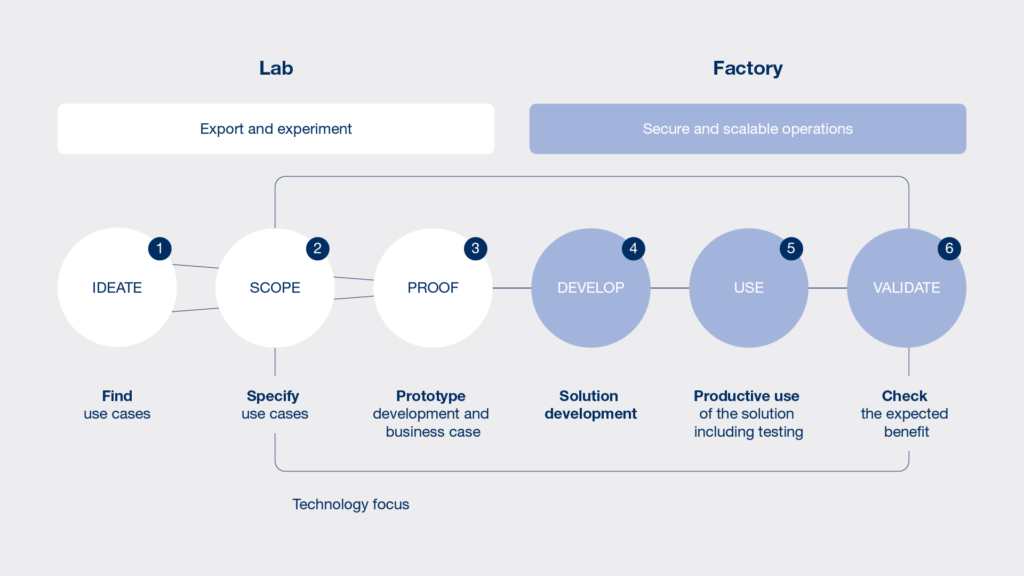

Thomas Löchte: Many banks and insurance companies have already implemented use cases with artificial intelligence, for example in claims settlement, in customer analytics, or to increase straight-through ("dark") processing. Algorithms for new business models have also been developed. However, most of this activity has remained experimental, confined to a "lab" environment, and has not yet been put into productive operation in the "factory" (see graphic).

What does the success of an AI factory or a platform that operates multiple AIs depend on?

Ali Rahimi: You need use cases that serve strategic goals, for example those set out in the planning of an AI initiative for the next five years. And you need an overview of your data situation: What information do your data sets already contain? Which target variables help answer marketing or risk questions? How can insight be generated from the data? In the lab, we test whether a use case works. We experiment with technologies and data to derive relevant business cases.

Löchte: If the business case is positive, the solution is moved into productive use in the factory. There, you operate the solutions and monitor the performance of the forecasting models as well as key metrics.

Rahimi: I see the current development as an intermediate stage between lab and factory. In its early stages, artificial intelligence has the character of a lab: data models change continuously and have to be adapted to reality again and again. Once the models run productively, via Docker or Kubernetes, for instance, they face millions of requests and mass data deliveries through integrations. Moreover, repurposing the models at that point is very costly. As with any "living" software, machine learning operations (MLOps) approaches such as MLflow or Kubeflow support the continuous improvement process.*

Löchte: Experts still argue about whether optimization should take place in the lab or in the factory. It is important to distinguish between the lab on the one hand, where new topics can be explored (quickly, at that), and the "IT factory floor" on the other, where, ideally, different AIs run in a secure and scalable operating environment.

The challenges are certainly manifold ...

Löchte: Exactly. Those driving the change must not only integrate these models technically but also support the change in how they are used. Only then can customer analytics data, which otherwise risks getting bogged down in specialist departments, be used across the organization. Cross-divisional purchase probabilities can then be exploited more intensively, and valid data contributes to greater efficiency in sales.

Stephan Roth: With SAP HANA, real-time evaluations are possible, for example for sales management based on collection and disbursement data, which previously required time-consuming calculations. In any case, there are opportunities to design products more innovatively on the basis of knowledge and experience. With regard to the core systems of insurance companies, SAP tools and products can be usefully supplemented for this purpose.

What are the requirements, dos and don'ts on the data analytics side for banks and insurance companies?

Speaking of changes ...

Rahimi: Driving behavior, for example, changes over the years, so the models have to be adapted to these changes in order to deliver correct results over the long term.

Löchte: The dangerous thing is that errors often take root slowly and almost unnoticed, so models degrade insidiously.
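One common way to make such creeping degradation visible is to compare the distribution of incoming data with the data a model was trained on. The following is a minimal Python sketch using the Population Stability Index (PSI), where PSI is the sum over bins of (a − e) · ln(a / e) for the actual share a and expected share e. PSI is one widely used drift measure, not a method named by the interviewees; the feature (annual mileage), the bin edges, the sample values and the 0.2 alert threshold (a frequently cited rule of thumb) are all assumptions for illustration.

```python
import math
from bisect import bisect_right


def histogram_shares(values, edges):
    """Share of values per bin; sorted inner edges define len(edges) + 1 bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    return [c / total for c in counts]


def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI = sum((a - e) * ln(a / e)) over bins; eps avoids log(0) for empty bins."""
    psi = 0.0
    for e, a in zip(histogram_shares(expected, edges), histogram_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical feature: annual mileage (thousand km) in a motor insurance portfolio.
training = [8, 10, 12, 12, 14, 15, 15, 16, 18, 20, 22, 25]
recent = [5, 6, 7, 8, 8, 9, 10, 10, 11, 12, 14, 15]
edges = [10, 15, 20]  # bins: <10, 10 to <15, 15 to <20, >=20

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb threshold
psi = population_stability_index(training, recent, edges)
print(f"PSI = {psi:.3f}, drift alert: {psi > DRIFT_THRESHOLD}")
```

Run regularly in the factory, for example per feature and per scoring batch, a check like this turns a slow shift in driving behavior into an explicit alert instead of a silent loss of accuracy.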

Keyword: learning as an important AI task. What role does transfer learning play here? And how far along is the market?

As more of these use cases emerge, what do you recommend for managing different AI solutions within a factory?

Löchte: First, I advise use case management that evaluates ideas in the lab. It examines to what extent usable insights can be generated from data in line with corporate strategy, and which fields of action can be derived from them. Second, you need to build up data science expertise in-house. Third, access to data for collecting and preparing information should be simple, for example through data lake solutions that make raw data available.

Rahimi: It's true that insurance companies are sitting on enormous amounts of data. Nevertheless, they cannot avoid using external data sources in their calculations, such as statistics on mortality and birth rates, weather and financial market developments, and regional information such as building structures, the share of garden area, and many more data points. Holistic integration platforms that make social media data, geoinformation and other external data available in the factory almost in real time, and processable in combination with existing portfolio data, are another key success factor.

Roth: Internal and external data help to identify where potential customers live, where insurers should expect storms and increased risks from environmental catastrophes, and where special products are possible due to local conditions. Outstanding payments can be identified easily, enabling institutions to take remedial action. Things get really interesting when banks and insurers forecast which individual needs have to be addressed when handling outstanding accounts.

Which solution approaches help to run AI applications in a factory?

And in the second step?

Löchte: All banks and insurers want to scale their AI initiatives to their specific needs. However, setting up AI solutions on legacy systems is more complex.

Roth: You also can't process real-time data with an old, batch-based host. On top of that, if there are multiple systems in-house, batch processing slows down workflow control even further.

Löchte: The market offers various approaches for operating proven data models, for example workflow-based or container-based platforms (see table): code written in Python or R, for example, is packaged into containers on an AI platform and put into production. But we also like to work with solutions that provide graphical interfaces, such as Microsoft ML Studio or Dataiku. You can use them to run many AI models on one platform in real time and, for example in the collection process, always show the "next best action." The future opportunities for these approaches are significant. It is important to find the use cases that are right for the company and to implement technologies that the employees have mastered. In this way, more and more business potential can be exploited.
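How several models on one platform might feed a "next best action" can be sketched in a few lines of Python. This is a hypothetical, simplified example, not the interviewees' implementation: each candidate action in the collection process has its own scoring model (stubbed here as plain functions that estimate the probability the debt is settled), and the action with the highest expected recovery net of its cost is recommended. Action names, features, scoring rules and costs are invented for illustration; on a real platform each model would typically run in its own container behind a service interface.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

Customer = Dict[str, float]


@dataclass
class Action:
    name: str
    cost: float                          # hypothetical cost of carrying out the action
    score: Callable[[Customer], float]   # model stub: probability that the debt is settled


def clamp(p: float) -> float:
    """Keep a stubbed probability within [0, 1]."""
    return max(0.0, min(1.0, p))


# Hypothetical model stubs; in production each would be a separately deployed model.
ACTIONS = [
    Action("payment_reminder", cost=1.0,
           score=lambda c: clamp(0.6 - 0.01 * c["days_overdue"])),
    Action("phone_call", cost=8.0,
           score=lambda c: clamp(0.75 - 0.005 * c["days_overdue"])),
    Action("installment_plan", cost=15.0,
           score=lambda c: clamp(0.4 + 0.0001 * c["amount_due"])),
]


def next_best_action(customer: Customer) -> Optional[str]:
    """Return the action with the highest expected recovery minus cost, or None if none pays off."""
    best_name, best_value = None, 0.0
    for action in ACTIONS:
        value = action.score(customer) * customer["amount_due"] - action.cost
        if value > best_value:
            best_name, best_value = action.name, value
    return best_name


print(next_best_action({"amount_due": 100.0, "days_overdue": 10.0}))
print(next_best_action({"amount_due": 20.0, "days_overdue": 5.0}))
```

With these made-up numbers, a customer owing 100 and 10 days overdue is assigned a phone call, while a customer owing 20 gets a reminder, because the call's cost outweighs its higher success probability on a small amount. Keeping the decision rule separate from the models means a model can be retrained or replaced without changing the rule.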

| Solutions | Advantages | Disadvantages |
|---|---|---|
| Stand-alone solutions search for the best algorithm or forecasting model: | Applicable for model selection and optimization | Support data preparation and feature engineering only rudimentarily |
| | Easy access, also through cloud solutions | Pure predictive models for supervised ML, no apps etc. |
| | Code and UI can be used | Fail to deliver on marketing promises: data scientists are still needed |
| Workflow-based platforms offer users not only the option of programming but also graphical interfaces, for example to model processes. In this way, they address a wider range of users: | Good support of the entire development workflow | Vendor lock-in for some platform offerings |
| | Graphical development possible, programming knowledge not mandatory | Low acceptance among programming-oriented data scientists |
| | Containerization and connection to computer clusters (partially) possible | Special requirements not always feasible |
| | Good collaboration between stakeholders | |
| Container-based solutions mostly offer classic code development approaches with a cloud focus. Deployments are made via Git or other version management, and containers are built for transfer to the corresponding system environments. This approach originally comes from classic software development. Packaging an AI application into multiple containers (modularization down to the model level) makes operations easier for IT: | High flexibility through a customized tool stack | In-house services (possibly software development) required for platform set-up (no SaaS) |
| | Good integration into existing infrastructure | Heterogeneity requires more expert knowledge for infrastructure and solutions |
| | Loose coupling of components reduces technology risks | Maintenance of solutions can be more extensive (due to heterogeneity) |
| | Good integration with existing CI/CD and change processes | |

Footnotes

- Machine learning operations approaches: https://github.com/mlflow/mlflow and https://github.com/kubeflow

- Pre-trained AI: free of charge, for example at https://huggingface.co/models or for a fee at Azure (https://azure.microsoft.com/en-us/services/cognitive-services/#features) or AWS Marketplace (https://aws.amazon.com/marketplace/solutions/machine-learning/pre-trained-models)

Operating AI Platforms: the interview and the table can be downloaded as a PDF in German. The contents were published as a guest article in Sapport Magazine 06/21.

Contact Person

Ali Rahimi

Chief Product Owner

Dock Finsure Integration

info-finsure@ikor.one

+49 40 8199442-0

Thomas Löchte

Managing Director

Informationsfabrik GmbH

communications@ikor.one

+49 40 8199442-0

Stephan Roth

Chief Product Owner

Dock Assurance

assurance@ikor.one

+49 40 8199442-0

Kristina Schreiber

Communications Manager

Anchor Circle Governance

communications@ikor.one

+49 40 8199442-0